DiLoCo (Distributed Low-Communication) represents a significant breakthrough in the field of artificial intelligence, particularly for training large language models (LLMs) across distributed computing environments. Developed by Google DeepMind researchers, this innovative approach has transformed how AI models can be trained efficiently across geographically dispersed and loosely connected computing resources.

The algorithm’s ability to drastically reduce communication requirements while maintaining performance has opened new possibilities for democratizing access to advanced AI development.

Origins and Fundamental Concepts of DiLoCo

DiLoCo emerged from Google DeepMind’s research efforts to address the substantial challenges associated with training large language models. Traditional approaches to training LLMs require tightly interconnected accelerators with devices exchanging gradients and other intermediate states at each optimization step. This requirement creates significant infrastructure challenges, as building and maintaining a single computing cluster with many accelerators is both difficult and expensive.

The paper “DiLoCo: Distributed Low-Communication Training of Language Models,” authored by Arthur Douillard, Qixuan Feng, Andrei Rusu, Rachita Chhaparia, Yani Donchev, Adhi Kuncoro, Marc’aurelio Ranzato, Arthur Szlam, and Jiajun Shen, introduced this groundbreaking approach to the AI community.

At its core, DiLoCo draws inspiration from Federated Learning principles, presenting itself as a variant of the widely recognized Federated Averaging (FedAvg) algorithm. However, DiLoCo introduces significant modifications by incorporating elements akin to the FedOpt algorithm. The innovation lies in its strategic use of AdamW as the inner optimizer and Nesterov Momentum as the outer optimizer, creating an ingenious combination that effectively tackles the challenges inherent in conventional training paradigms.

This approach enables training of language models on islands of devices that are poorly connected, making it possible to leverage several computing clusters each hosting a smaller number of devices rather than requiring a single large, tightly-connected cluster.

The foundational strength of DiLoCo resides in its bi-level federated optimization paradigm. This architecture allows for significant reduction in communication requirements while maintaining model performance comparable to traditional synchronous methods. By enabling distributed training across loosely connected hardware, DiLoCo represents a fundamental shift in how we approach large-scale AI model development.

Technical Architecture and Implementation

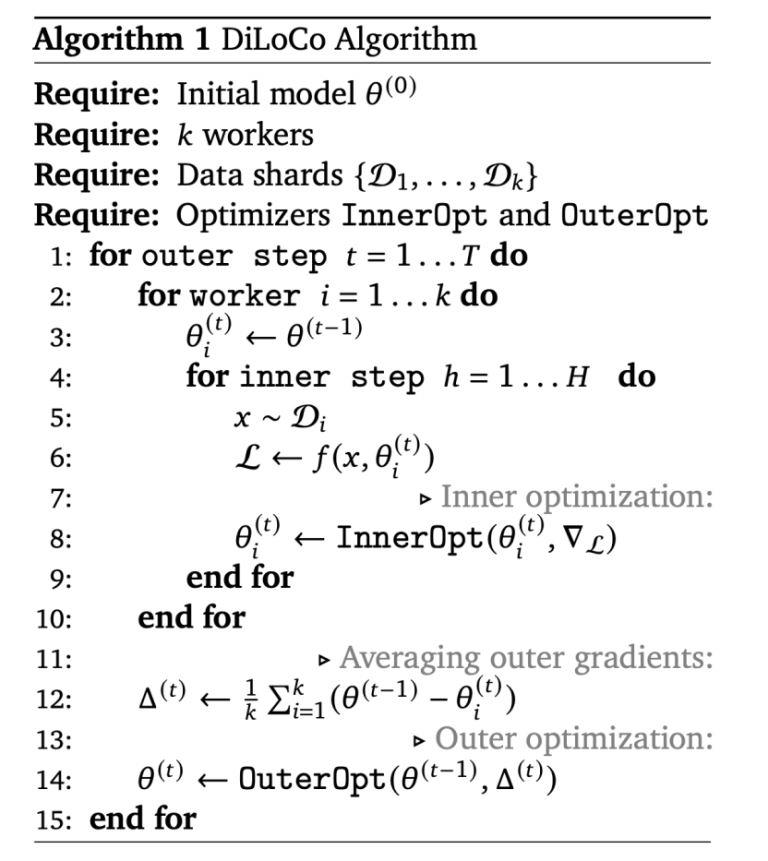

DiLoCo’s technical implementation revolves around a sophisticated inner-outer optimization algorithm that balances local computation with global synchronization. This approach allows both local and global updates to occur at different frequencies, optimizing for computational efficiency and communication constraints.

The training process begins by replicating a pretrained model θ(0) multiple times across different worker nodes. Each worker independently trains its model replica on an individual data shard for H steps using the inner optimizer (AdamW). This local training phase proceeds without any communication between workers.

Following this independent training period, workers then communicate their updates to each other in the outer optimization phase. These updates, referred to as “outer gradients,” are aggregated via an all-reduce operation into a single update, which is then applied using the outer optimizer (Nesterov Momentum) to the current global parameters.

More specifically, during the inner optimization phase, each worker independently executes the AdamW optimizer on their local data starting from the current values of the global parameters. This process continues for multiple steps without any inter-worker communication. After completing the preset number of inner optimization steps (typically between 50-500), all workers communicate their accumulated updates to each other.

The algorithmic implementation can be represented as follows: local replicas perform, in parallel, H steps of the inner optimizer on different subsets of the data. Every H steps, each replica computes an outer gradient (a delta in the parameter space) and communicates it to all other replicas.

The communication between workers can be performed either through a central parameter server or through direct communication between workers (e.g., with a ring all-reduce). This results in each worker obtaining the average of all outer gradients, which is then applied to the outer parameters using the Nesterov Momentum optimizer. This updated global model then becomes the starting point for the next round of inner optimization. This cyclic process repeats throughout the training process, enabling each replica’s training in distinct global locations using various accelerators.\

Advantages and Performance Characteristics

DiLoCo introduces several significant advantages over traditional distributed training approaches, with reduced communication requirements being perhaps the most notable. Standard data-parallel distributed training requires workers to exchange gradients at every optimization step, creating substantial communication overhead, particularly when workers are geographically dispersed or connected through low-bandwidth channels.

In contrast, DiLoCo dramatically reduces this communication burden by requiring exchanges only once every H steps (typically around 500 steps). This approach reduces the total communication requirements by a factor equal to the number of inner optimization steps compared to standard data-parallel approaches. In practical experiments using the C4 dataset, DeepMind researchers demonstrated that DiLoCo with eight workers achieves performance on par with fully synchronous optimization while reducing communication by an astounding 500 times.

The algorithm exhibits exceptional resilience to variations in data distribution among workers, making it robust to potential data imbalances or heterogeneity. Furthermore, DiLoCo demonstrates remarkable adaptability to changing resource availability during training. It can seamlessly leverage resources that become available mid-training and remains robust when certain resources become unavailable over time. This flexibility makes DiLoCo particularly valuable in real-world scenarios where computing resources may fluctuate.

DiLoCo’s architecture provides three fundamental advantages that transform distributed AI training. First, it has limited co-location requirements, where each worker necessitates co-located devices, yet the total number required is notably smaller than traditional approaches, easing logistical complexities. Second, the reduced communication frequency means workers need to synchronize only at intervals of H steps, significantly curbing communication overhead. Third, the algorithm supports device heterogeneity, allowing different clusters to operate using diverse device types, offering unparalleled flexibility in hardware selection.

OpenDiLoCo: Open-Source Implementation and Scaling

A significant development in the DiLoCo ecosystem came in July 2024 when Prime Intellect AI released OpenDiLoCo, an open-source implementation and scaling of DeepMind’s DiLoCo method. This release represents a major step toward democratizing access to advanced AI training capabilities by enabling collaborative model development across globally distributed hardware.

OpenDiLoCo provides a reproducible implementation of DeepMind’s DiLoCo experiments within a scalable, decentralized training framework. The implementation has demonstrated impressive effectiveness by successfully training a model across two continents and three countries while maintaining 90-95% compute utilization. Furthermore, Prime Intellect has scaled DiLoCo to three times the size of the original work, demonstrating its effectiveness for billion-parameter models.

The OpenDiLoCo implementation is built on top of the Hivemind library. Instead of using torch.distributed for worker communication, Hivemind utilizes a distributed hash table (DHT) spread across each worker to communicate metadata and synchronize them. This DHT is implemented using the open-source libp2p project. OpenDiLoCo employs Hivemind for inter-node communication between DiLoCo workers and PyTorch FSDP (Fully Sharded Data Parallel) for intra-node communication within DiLoCo Workers, creating a sophisticated two-tier communication strategy.

The release of OpenDiLoCo under an open-source license (available at https://github.com/PrimeIntellect-ai/OpenDiLoCo) reflects a commitment to fostering collaboration in this promising research direction to democratize AI. This open approach allows a broader community of researchers and developers to contribute to and benefit from advancements in distributed AI training methodologies.

Recent Research and Optimizations

The research community has continued to build upon and optimize the original DiLoCo approach. One notable advancement is the development of “Eager Updates For Overlapped Communication and Computation” in distributed training. This research explores methods to further optimize the training process by overlapping communication and computation phases, thereby reducing overall training time.

Two specific variants have emerged from this research: “Streaming DiLoCo with 1-inner-step overlap” and “Streaming DiLoCo with 1-outer-step overlap.” These approaches aim to minimize idle time during the training process by strategically overlapping different phases of the optimization process. Performance comparisons between these variants and standard data-parallel training show significant improvements in efficiency while maintaining comparable model quality.

A detailed comparison across different token budgets (25B, 100B, and 250B) demonstrates that both streaming DiLoCo variants achieve comparable evaluation loss and performance metrics on benchmarks like HellaSwag, Piqa, and Arc Easy compared to data-parallel approaches. However, they do so with dramatically reduced communication requirements, particularly at higher token budgets.

For example, at 250B tokens, standard data-parallel training requires approximately 1097 hours with a 1 Gbit/s connection, while streaming DiLoCo approaches require only 6.75-8.75 hours, representing a reduction of over 99% in communication time.

Applications and Implications for AI Development

The development and advancement of DiLoCo have profound implications for the AI research and development landscape. By dramatically reducing the communication requirements for distributed training, DiLoCo makes it feasible to train large language models across geographically dispersed computing resources. This capability opens the door to more collaborative and democratic approaches to AI development, potentially reducing the concentration of AI development capabilities among a few organizations with access to massive computing clusters.

In the context of large language models, which have revolutionized AI but traditionally required massive, centralized compute clusters, DiLoCo provides a pathway to broader participation in AI development. This democratization has the potential to accelerate innovation by allowing more diverse participants to contribute to the advancement of AI technologies.

The approach is particularly valuable in scenarios where high-bandwidth connections between all computing resources are not available or are prohibitively expensive. By enabling effective training across poorly connected devices, DiLoCo makes it possible to leverage a wider range of computing resources, potentially including edge devices or distributed data centers with limited interconnection bandwidth.

Beyond technical applications, DiLoCo’s approach has implications for business applications as well. In industries like B2B marketing automation, DiLoCo’s innovations could enable more efficient scaling of AI-powered marketing solutions by simplifying language model training and reducing infrastructure requirements.

Future Directions and Potential Developments

As DiLoCo continues to evolve, several promising research directions are emerging. One area of active development is further optimization of the balance between local and global updates, potentially through adaptive approaches that dynamically adjust the frequency of communication based on model convergence metrics or network conditions.

Another promising direction is the integration of DiLoCo with other distributed training techniques, such as model parallelism or pipeline parallelism, to create hybrid approaches that address multiple scaling challenges simultaneously. The combination of these techniques could enable training of even larger models with more complex architectures across more diverse computing environments.

The open-source availability of implementations like OpenDiLoCo is likely to accelerate innovation in this space, as more researchers and developers experiment with and extend the approach for different models, tasks, and computing environments. This community-driven development could lead to specialized variants of DiLoCo optimized for different scenarios or hardware configurations.

In Conclusion

DiLoCo represents a significant breakthrough in distributed AI training, offering a solution to one of the most challenging aspects of large-scale language model development. By enabling effective training across poorly connected devices with dramatically reduced communication requirements, DiLoCo opens new possibilities for collaborative and distributed AI development.

The algorithm’s robustness to data distribution variations and changing resource availability makes it particularly valuable in real-world scenarios where perfect conditions cannot be guaranteed. The recent open-source implementation in the form of OpenDiLoCo further democratizes access to these advanced training capabilities, potentially accelerating innovation in AI technologies.

As AI models continue to grow in size and complexity, approaches like DiLoCo that address fundamental scaling challenges will become increasingly important. The ongoing research and development in this area promise to further enhance our ability to train sophisticated AI models efficiently and collaboratively, potentially reshaping the landscape of AI development in the coming years.

Gregory M. Skarsgard is a technology nerd with extensive experience in digital advancements. Schooled in software development and data analysis, he's driven by curiosity and a commitment to staying ahead of tech trends, making him a valuable resource.

Also an AI hobbyist, Greg loves experimenting with AI models and systems. This passion fosters a nuanced perspective, informing his professional work and keeping him at the forefront of AI's transformative potential.